31 May 2025

You can call me Al...

So, following Karl’s brilliantly inspirational blog https://mrmict.com/2025/05/29/what-is-computing-2-0/, I thought I should dispel any myths, doubts, misconceptions or confusion about what #LearningToLeadComputing covers.

Firstly, one of the comments (or, in commonly used teaching terminology, ‘starter’ questions) I am most frequently asked is “But I thought you only worked in or taught Primary?” or “Given Karl’s extensive experience and expertise in teaching and leading Primary, surely #LearningToLeadComputing will be focused on Primary?” My own back-story is that when I took early severance in 2010, after nearly 24 years working in the Civil Service, I actually wanted to teach Secondary Computing. However, I was warned that my very corporate style of delivering training courses to adults would not engage teenagers. So before securing a place on the PGCE programme at Goldsmiths College, I spent a year volunteering at different schools, where I fell in love with working with the very youngest learners. So yes, like Karl, I am a Primary specialist.

However, in 2020, as the world faced global challenges, my brilliant bosses offered me a unique role working across the schools within the Multi Academy Trust that my ‘base’ or ‘home’ school is part of, supporting the Secondaries in our family of schools. Since September 2020 I’ve been supporting GCSE and A-Level Computer Science students, and in the 2024-25 school year that extended to supporting the KS3 Computing curriculum. Looking back over this time, I’m really pleased and proud of my former students, a number of whom secured their first choice of university place, or a vocational equivalent, to continue studying Computer Science and allied subjects. You’ll simply have to buy the book to find out how I transformed myself from corporate cardboard to engage, enthuse and enrich Computing at the schools I work for.

But what’s my ‘why’, particularly in an age where, as Karl contemplates, Artificial Intelligence dominates all ideas about the future of Computing? For me, my first experience of Artificial Intelligence came in 2018, when a family friend was working as a sub-contractor for the then newly established part of the Google empire named Google AI (source: https://en.wikipedia.org/wiki/Google_AI, last visited 31 May 2025). He showed me how he had been contracted to write programs and build a database able to recognise pictures of cats, essentially by labelling photographs of cats found through a Google Images search.

Since then, much of what I’ve seen of AI reminds me of my teenage years, when we were warned about the rise of the robots and how robotics and automation would effectively wipe out the need for work, and consequently the labour market and the ability to earn a living wage. Whilst that may have proved true for many manufacturing processes, schools, hospitals, supermarket shopping, tourism, international finance and many other industries continue to rely on humans, and their fundamental working practices remain largely unchanged from those of fifty-plus years ago.

What the Information Age, or Technological Era, has transformed, especially since Tim Berners-Lee invented the World Wide Web in 1989 (source: https://www.bbc.co.uk/history/historic_figures/berners_lee_tim.shtml, last visited 31 May 2025), is our ability to share content more widely and rapidly. By building cloud-based computing systems, the global technology giants and social media platforms have created an environment in which, by me writing this blog and you reading it, we are feeding the algorithms written to harvest this information. Whilst this might sound very Orwellian and sinister, the idea underneath search engines is essentially the same one early biologists used when they established classification systems to record and organise the information they were collecting about our natural world. A piece of writing, an image, or an audio or video recording is given labels by which the file can be indexed. Web crawlers then process those labels, and search engines track, trace and record how often the files are accessed when people ‘search’ for them again on the World Wide Web.
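For the more technically curious, the labelling-and-indexing idea above can be sketched in Python as a tiny ‘inverted index’: each label points to the files tagged with it, and a running count of how often each file has been opened is used to rank the results. This is a minimal, hypothetical illustration rather than how any real search engine works; the file names, labels and the functions `build_index` and `search` are invented for the example, and real engines weigh many more signals.

```python
from collections import Counter, defaultdict

# Hypothetical files, each tagged with descriptive labels (like a biologist's classification)
FILES = {
    "cake.jpg": ["cake", "baking", "dessert"],
    "cat.jpg": ["cat", "pet"],
    "recipe.txt": ["cake", "recipe"],
}


def build_index(files):
    """Build an inverted index: each label maps to the set of files tagged with it."""
    index = defaultdict(set)
    for name, labels in files.items():
        for label in labels:
            index[label].add(name)
    return index


def search(index, access_counts, label):
    """Return files carrying `label`, most-accessed first (ties broken alphabetically)."""
    matches = index.get(label, set())
    return sorted(matches, key=lambda name: (-access_counts[name], name))


if __name__ == "__main__":
    index = build_index(FILES)
    access_counts = Counter()  # how often each file has been opened
    print(search(index, access_counts, "cake"))  # ['cake.jpg', 'recipe.txt']
    access_counts["recipe.txt"] += 1  # someone opens the recipe
    print(search(index, access_counts, "cake"))  # ['recipe.txt', 'cake.jpg']
```

With no accesses recorded, the two ‘cake’ files are simply listed alphabetically; once `recipe.txt` has been opened, it rises to the top of the results, which is the feedback loop of searching and recording described above in miniature.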

Predictive text works in a very similar way. By harvesting all of the typed text that any of us have saved on a computer or turned into an electronic document, technology has been able to build up patterns of phrases from which it can automatically generate grammatically correct sentences. What computing power has enabled is for these processes of collecting and processing information to become much more rapid – the Internet on steroids.
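To make the pattern-building idea concrete, here is a toy Python sketch of predictive text as a ‘bigram’ model: it counts which word follows which in some sample text, then suggests the most frequent follower. The sample sentence and the function names `train_bigrams`, `predict_next` and `generate` are hypothetical, and real predictive keyboards and AI language models use vastly more data and far more sophisticated statistics; this only shows the underlying principle of counting patterns.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """For each word, count which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def predict_next(following, word):
    """Suggest the most frequent follower of `word`, or None if it was never followed."""
    counts = following.get(word.lower())
    if not counts:
        return None
    # most_common keeps first-seen order when counts are tied
    return counts.most_common(1)[0][0]


def generate(following, start, length):
    """Chain up to `length` predictions onto `start` to 'write' a short phrase."""
    words = [start.lower()]
    for _ in range(length):
        nxt = predict_next(following, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


if __name__ == "__main__":
    # Hypothetical training text standing in for "all of the typing we have saved"
    model = train_bigrams("the cat sat on the mat and the cat ate the cake")
    print(predict_next(model, "the"))  # cat
    print(generate(model, "the", 4))   # the cat sat on the
```

Because “cat” follows “the” twice in the sample, the model suggests “cat”; chaining predictions produces a phrase that looks grammatical even though the model has no idea what a cat or a mat is, which is a useful talking point with pupils.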

So what are the implications for teaching Computing to children aged 3 to 13, and to those who are eager to pursue a school-leaving examination qualification in Computer Science? Early indications from the 2025 Curriculum Review seem to suggest that there may be a greater emphasis on Digital and Media Literacy, with a focus not just on learning how digital content is created and shared, but on applying critical thinking skills, for example to consider whether a ‘photograph’ of a slice of cake is real or an AI-generated image. Beyond that initial question, the aim is to understand why the image was created and for whose benefit. The ubiquity of these enhanced technologies and systems means that ultimately ‘we’, as a global community of teachers, parents, policy makers and decision makers, need to collectively ensure that every young person we have the privilege and honour of working with has the best opportunity to become a digital citizen of the future, able to navigate our ever-evolving online world successfully, safely and securely.

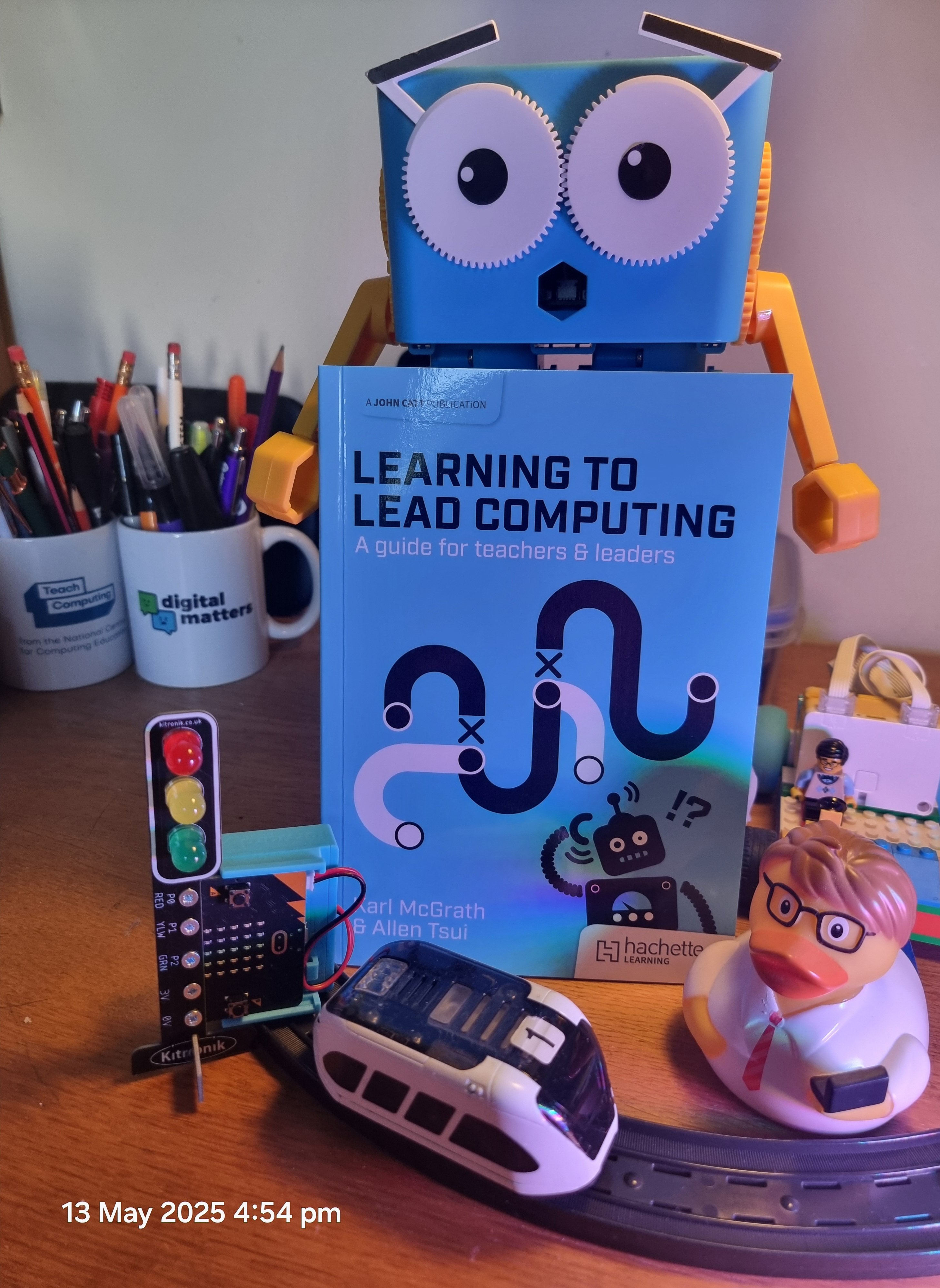

Learning to Lead Computing by Karl McGrath and Allen Tsui is available in bookshops on High Streets and online.
