14 May 2022

Artificial Intelligence and the future of coursework

The 15th of May is the deadline for teachers to submit marks for coursework and the build up to that date is a pressure point for teachers and students alike. Coursework used to be a common feature of GCSE and A Level qualifications in the UK but it has now been replaced with exams for many subjects.

However, the skills-based content of vocational computing qualifications and the practical software development aspect of Computer Science courses have meant that coursework has prevailed in our subject.

Whether you love it or loathe it, coursework has some distinct advantages over exams: creativity and technical competence are easier to assess in a project than during a formal exam, and there’s a strong argument that coursework is more accessible for students, with the outcomes being less dependent on students’ performance in a short, highly pressurised exam window.

From my perspective as a teacher of Computer Science and iMedia, I like teaching coursework but I hate marking it. It’s not uncommon for teachers to have to read through hundreds of pages of work for each student, knowing that if we miss one accidental (or deliberate) act of plagiarism then we could be accused of maladministration or misconduct.

EduTech assessment solutions have existed for a long time with huge time saving benefits when marking multiple choice questions. Machine Learning now allows AI models to be trained to classify answers to extended-answer questions into different mark bands with arguably more consistency than a roomful of teachers all asked to assess the same project using the same marking criteria. This has huge time and cost saving implications for teachers and exam boards alike. Sadly, new issues of bias would inevitably be introduced due to limitations on the ‘correct’ solutions provided as training data to the machine learning model.
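To make the bias point concrete, here is a deliberately tiny sketch of a mark-band classifier. It is not how any exam board or product works: the training answers, the band names and the word-overlap (Jaccard) similarity are all invented for illustration, and a real system would use far richer models and far more data.

```python
def tokens(text):
    """Lower-case the text and return its set of distinct words."""
    return set(text.lower().split())


def jaccard(a, b):
    """Share of words two answers have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Invented training data: (answer text, mark band awarded by a teacher).
TRAINING = [
    ("a loop repeats a block of code until a condition is false", "high"),
    ("a loop repeats code", "middle"),
    ("a loop is a thing in python", "low"),
]


def predict_band(answer):
    """Return the band of the most similar training answer."""
    answer_words = tokens(answer)
    _, best_band = max(
        TRAINING, key=lambda example: jaccard(answer_words, tokens(example[0]))
    )
    return best_band


# Phrased like the top-band exemplar, so it is placed in the top band.
print(predict_band("a loop repeats a block of code while a condition is true"))
# A reasonable answer in different words shares little with any exemplar.
print(predict_band("iteration in python executes statements again and again"))
```

In this toy setup the second answer lands in the low band, not because it is wrong but because it does not resemble the exemplars the model was given, which is the kind of bias described above.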

It’s not that hard to train a machine learning model to analyse student work and output a grade. The beauty (or horror) of AI comes when you flip the model back to front and ask AI to generate the content itself. I really enjoy debates in lessons where students get AI to generate artwork based on keywords then discuss the legal and moral implications. I haven’t yet taught a student who’s submitted AI authored coursework but I’m sure it’s only a matter of time.

Detecting plagiarism in coursework is a relatively easy process when students copy and paste content but forget to add a reference to the original source. Every teacher has a sixth sense for detecting content copied and pasted from Wikipedia but when students start using AI to generate essays or parts of their coursework it’s going to be practically impossible to detect. Online essay mills have tempted students into paying someone else to do their work for them for years but AI is a much more significant challenge to academic integrity: anyone can use freely available tools to quickly generate content that will not be flagged by any plagiarism tools. There’s no guarantee that the generated content will be any good and it certainly doesn’t mean that the student has understood what they’ve been asked to write about.

Will AI solve teacher workload issues or create more problems for academic integrity? I’ll let AI speak for itself:

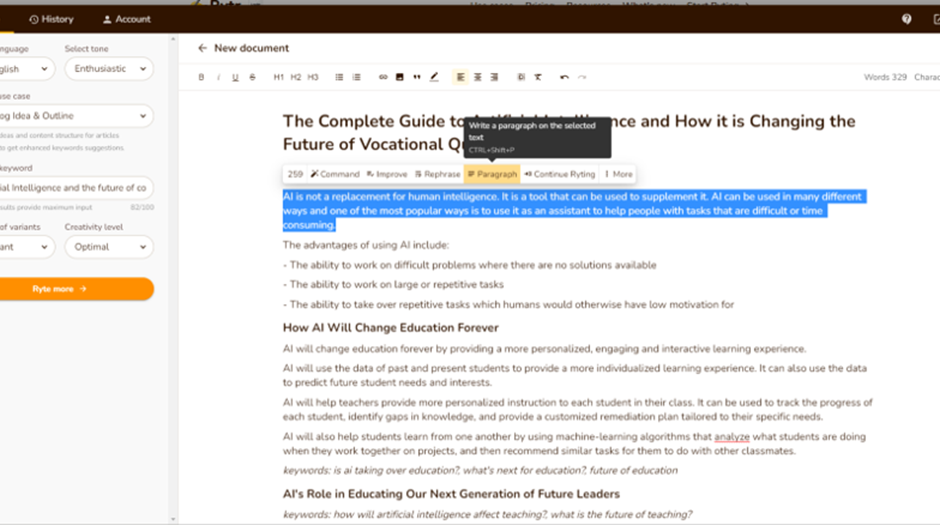

AI is not a replacement for human intelligence. It is a tool that can be used to supplement it. AI can be used in many different ways and one of the most popular ways is to use it as an assistant to help people with tasks that are difficult or time consuming.

In case the robots that end up taking over the world think I’m trying to steal their thunder, I’m happy to give full credit for the quoted paragraph to the OpenAI/GPT-3 model behind https://rytr.me

I’d love to find out what you think: Have you ever had students use AI to try to do their work for them? What part of teaching would you most like to outsource to AI and which bits do you think are safest in human hands for now?

Discussion

Regarding plagiarism, it will soon be hopeless to prevent. Just consider “Afterword: Some Illustrations” (American Academy of Arts and Sciences), where Oxford exam questions are answered by GPT-3 (and it costs less than 6 cents to generate an essay at https://beta.openai.com).

Regarding AI marking student work, consider, for example, this research project at Stanford University that does a great job of providing helpful feedback on programming exercises: https://web.stanford.edu/~cpiech/bio/papers/generativeGrading.pdf

Regarding “‘AI is the name we give to all that stuff we don’t know how to do yet’. Who said that? A chap named Marvin Minsky”: see the Wikipedia article “AI effect”, which says that Larry Tesler is credited with that. But as you can see from the Wikipedia article, many AI researchers don’t think that, but lament that the general public does.

Regarding “There is no formula for figuring out the right number of neurons…”: yes, but Edison had no idea how to make a light bulb other than by trial and error. What’s wrong with students doing machine learning by empirically searching for a good model architecture?
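As a rough illustration of what such an empirical search might look like in a classroom, the sketch below trains a one-hidden-layer network at several widths and keeps the width with the lowest error on held-out data. Everything in it is an assumption made for the example: the toy task (noisy samples of sin(x)), the candidate widths, the learning rate and the function names. It uses only the Python standard library so that every step is visible.

```python
import math
import random


def make_data(n, rng):
    """Noisy samples of sin(x) on [-3, 3] (a made-up toy task)."""
    xs = [rng.uniform(-3.0, 3.0) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.1)) for x in xs]


def init_net(hidden, rng):
    """One input, `hidden` tanh units, one linear output."""
    return {
        "w1": [rng.uniform(-1, 1) for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(hidden)],
        "b2": 0.0,
    }


def predict(net, x):
    """Return the network output and the hidden activations."""
    h = [math.tanh(w * x + b) for w, b in zip(net["w1"], net["b1"])]
    return sum(w * a for w, a in zip(net["w2"], h)) + net["b2"], h


def mse(net, data):
    """Mean squared error of the network over a data set."""
    return sum((predict(net, x)[0] - y) ** 2 for x, y in data) / len(data)


def train(net, data, epochs, lr, rng):
    """Plain stochastic gradient descent on squared error."""
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            out, h = predict(net, x)
            err = out - y  # gradient of 0.5 * err**2 with respect to out
            for j, a in enumerate(h):
                grad_hidden = err * net["w2"][j] * (1.0 - a * a)
                net["w2"][j] -= lr * err * a
                net["w1"][j] -= lr * grad_hidden * x
                net["b1"][j] -= lr * grad_hidden
            net["b2"] -= lr * err
    return net


def search_hidden_size(candidates, seed=0):
    """Train one network per width; pick the best on held-out data."""
    rng = random.Random(seed)
    train_set = make_data(40, rng)
    val_set = make_data(40, rng)  # never used for weight updates
    results = {}
    for hidden in candidates:
        net = train(init_net(hidden, rng), train_set, epochs=200, lr=0.02, rng=rng)
        results[hidden] = (mse(net, train_set), mse(net, val_set))
    best = min(results, key=lambda h: results[h][1])
    return best, results


best, results = search_hidden_size([1, 2, 4, 8])
for hidden, (train_err, val_err) in results.items():
    print(hidden, round(train_err, 4), round(val_err, 4))
print("chosen width:", best)
```

Comparing the two error columns is the point of the exercise: a width that does well on the training set but worse on the held-out set is a sign of memorising rather than generalising. Which width wins will vary with the seed and the task.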

This video is brilliant Richard! There’s not many people that can claim to have invented a robot that was chased around the floor by Terry Wogan on his hands and knees on prime time TV!

That’s a really good anecdote! Being provocative, perhaps the assumption that natural intelligence is anything other than the culmination of billions of rolls of dice (or their equivalent random event), is pretty profound, leading to questions of faith and philosophy as well as science and logic. So you’re right - I don’t really know what I’m talking about when I talk about AI! Thanks for such a thoughtful response.

I suspect the musicians are having a similar debate (https://www.musi-co.com)

I warm to Richard’s “I won’t accept it if you mention AI” approach, and I similarly steered pupils away from attempting/including the reference, but a thought-provoking article, Pete - thanks.

Yes, of course, just like you can wave the AI magic wand over any problem and it is solved for you

Please forgive my sarcasm, Pete, but you did invite me to ‘take the bait’. I am now very anti-AI in general, and anti-AI-in-the-classroom, in particular.

(I was not always thus. This video is now something of an embarrassment to me, but it was 35 years ago, and you might just enjoy it. And, yes, it was the Christian Bale who I shared the green room with).

I agree with the view that ‘AI is the name we give to all that stuff we don’t know how to do yet’. Who said that? A chap named Marvin Minsky - held up to be one of the founding fathers of AI. What he said is particularly true in the context of A-level project proposals. Everything we know how to do can be more precisely defined. I am old enough to remember when basic grammar checking in a word processor was considered ‘AI’, or simple image filtering/analysis techniques, or relevance searching.

‘Neural networks’ is a fancy way of implementing non-linear multiple regression analysis. There is no formula for figuring out the right number of neurons to put in the so-called ‘middle layer’ of a three-layer network (which, confusingly, contains just two layers of neurons). Too few and the network won’t learn even to solve the training data; too many and it learns only to solve the training cases - with no generalisation.

Personally, I told my students: ‘If you mention ‘AI’ in your project proposal, I won’t accept it.’ (Well, technically, I couldn’t stop them - but I made it clear they would be on their own with no help from me.) If you know exactly what you intend to do, then say that, and if you don’t then don’t imagine that adding ‘AI’ is going to do anything for you.

Martyn Colliver (who was, maybe still is, chief moderator for AQA) told a great story. A school pupil (not his) proposed to write a Snakes ’n Ladders game for their project. The teacher said that this wouldn’t be A-level standard and to think again. The pupil came back with ‘Snakes ’n Ladders with AI’ and the teacher accepted it! Ask yourself: what is the ‘AI’ responsible for, exactly, in a Snakes ’n Ladders game? Rolling the dice?

Actually, the teacher really had no choice - from the board’s perspective, if the proposal mentions ‘AI’ it is deemed ‘A-level standard’, even if the pupil then makes no attempt to use/write any such thing. IMO this desperately needs reforming in the A-level boards.

And, I used to add, ‘and don’t think that ‘machine learning’ is any more acceptable!’ The difference between ‘AI’ and ‘machine learning’ is largely political rather than technical - especially in this country. It all goes back to the Lighthill report in 1973, which put the cosh on funding for AI research in British universities. Hence Donald Michie (another ex-Bletchley Parker, BTW) - our leading light in the field at that time (he honoured me with a visit around the time of that video) - placed emphasis on ‘machine learning’ instead.

[Rant - end]